I’ve long been dreaming of connecting these two important technologies and running them in a way that I actually understand. I’m not good at Android programming (for those Cardboard VRs) and not really good at Unity (although it would be fun to dive deeper), and somehow my driver situation with the DK2 and Windows 10 deteriorated.

There have been earlier attempts to run the Oculus on a Pi, but to my eyes the latest iteration I found fits my needs best (it includes Python bindings and 3D libraries).

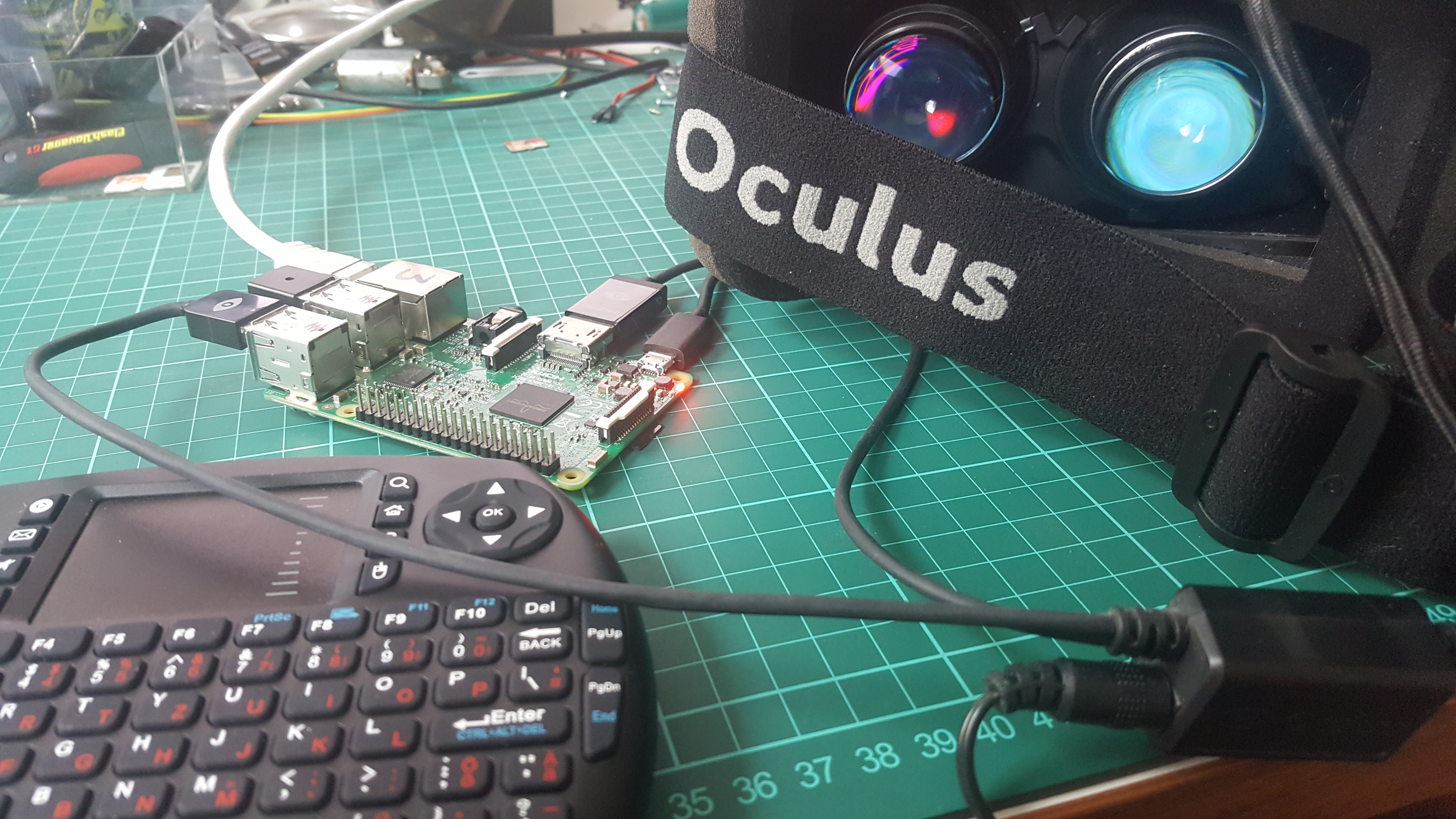

So recently, I came across Wayne Keenan’s blog; he actually did exactly what I’m dreaming of, namely running the DK2 controlled by the Pi3. I’m less interested in maximising the geometric complexity of VR scenes than in interaction design and in what untethered VR (or even AR) can actually feel like.

His GitHub recipe works nicely, and the installation really was a matter of about 30 minutes.

The installation also changes the display configuration so that the Pixel desktop is shown in the DK2. However, that view is not stereo, i.e. the desktop spans both eyes. Connecting the DK2 before installing the display config results in an orange/blue blinking LED on the goggles.

Wayne’s demos are started from the terminal, so you first have to find a way to open one; I think pressing the ALT key lets you navigate from there. I really love the little keyboard because you can peek down at the keys past the goggles. Still, I’d prefer a little video window showing a live feed from a camera underneath my goggles that films my keyboard, just like this gentleman did with RiftSketch.

That project, however, runs in the browser and uses languages and concepts I’m not familiar with. You can try it out here.

Still, it would be cool to ditch the Pixel desktop altogether and really work in an environment inside the Rift itself. With Wayne’s Python implementation, I could imagine writing a little “shell” that lets me type shell commands in cyberspace and execute them using Python’s facilities for running shell commands. That way, one could easily switch between the demos, I hope.
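As a rough idea of what the backend of such a shell might look like, here is a minimal sketch built on Python’s standard `subprocess` module. It is not part of Wayne’s code: `run_command` is a hypothetical name, and the VR side (reading the typed line and drawing the returned text as a panel in the Rift) is assumed rather than shown.

```python
import shlex
import subprocess


def run_command(line, timeout=30):
    """Run one line typed in a hypothetical VR shell.

    Returns (returncode, output) so a renderer could draw the output as
    text floating in front of the user. Needs Python 3.5+ (subprocess.run).
    """
    args = shlex.split(line)          # split like a POSIX shell, but start no shell
    if not args:
        return 0, ""
    try:
        result = subprocess.run(
            args,
            stdout=subprocess.PIPE,
            stderr=subprocess.STDOUT,     # merge errors into the same text panel
            universal_newlines=True,      # decode the output bytes to str
            timeout=timeout,              # don't let a hanging demo freeze the shell
        )
    except FileNotFoundError:
        return 127, "command not found: {}".format(args[0])
    except subprocess.TimeoutExpired:
        return 124, "timed out after {} s".format(timeout)
    return result.returncode, result.stdout


if __name__ == "__main__":
    for typed in ["echo hello from the Rift", "ls /"]:
        code, output = run_command(typed)
        print(code, output)
```

Because no real shell is involved, pipes and redirects such as `ls | grep py` will not work; passing `shell=True` would allow them, at the cost of handing the typed text straight to `/bin/sh`.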

RiftSketch is also very cool in that you can write code and execute it in your 3D environment to see the effects immediately. The Pi3 should definitely be fast enough for this.
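Python can do something similar in spirit with `compile` and `exec`. The sketch below is only an assumption about how one might wire it up on the Pi, not how RiftSketch itself works (it runs in the browser): typed code modifies a copy of a hypothetical `scene` dictionary, and if the code fails, the last working scene is kept so that a typo does not blank out the view.

```python
import copy
import traceback


def apply_live_code(source, scene):
    """Run user-typed code against a deep copy of the (hypothetical) scene state.

    Returns (new_scene, error_text). On any error the old scene is returned
    unchanged together with the traceback text.
    """
    candidate = copy.deepcopy(scene)      # never modify the live scene directly
    namespace = {"scene": candidate}      # expose the copy to the typed code as `scene`
    try:
        exec(compile(source, "<live>", "exec"), namespace)
    except Exception:                     # also catches SyntaxError raised by compile()
        return scene, traceback.format_exc()
    return candidate, None


scene = {"cube_size": 1.0, "color": [1.0, 0.0, 0.0]}
scene, err = apply_live_code("scene['cube_size'] = 2.5", scene)
print(scene["cube_size"], err)            # 2.5 None
scene, err = apply_live_code("scene['cube_size'] = 1/0", scene)
print(scene["cube_size"], err.splitlines()[-1])   # 2.5 ZeroDivisionError: division by zero
```

A render loop would call `apply_live_code` whenever the typed text changes and draw whatever scene comes back. Note that `exec` runs arbitrary code, which is fine on your own Pi but not for untrusted input.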

Finally, it would be cool to have the Asus Xtion (a Microsoft Kinect-like depth camera) scanning point clouds that I can see in real time. There have been successful attempts to use the Kinect with the Pi2.

I guess I’d need a second Pi3 for recording the point clouds and somehow “stream” them over to the Pi driving the Rift. Fortunately, somebody wrote a tutorial on how to set up the Kinect V1 on a Pi; let’s see whether that’s as easy as getting the DK2 running on the Pi was today. Adventures ahead!
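For the “streaming” part, one simple option, purely an assumption and untested on actual Pis, is to send each point cloud over a TCP socket as a length-prefixed block of 32-bit floats. `send_cloud` and `recv_cloud` are hypothetical helpers; getting the points out of the Xtion or Kinect in the first place is not covered here.

```python
import socket
import struct

HEADER = struct.Struct("!I")    # number of points, network byte order
POINT = struct.Struct("!fff")   # x, y, z as 32-bit floats (12 bytes per point)


def send_cloud(sock, points):
    """Send a list of (x, y, z) tuples as one length-prefixed frame."""
    payload = b"".join(POINT.pack(*p) for p in points)
    sock.sendall(HEADER.pack(len(points)) + payload)


def _recv_exact(sock, n):
    """Read exactly n bytes; TCP may deliver a frame in several chunks."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed mid-frame")
        buf.extend(chunk)
    return bytes(buf)


def recv_cloud(sock):
    """Receive one frame and return it as a list of (x, y, z) tuples."""
    (count,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    data = _recv_exact(sock, count * POINT.size)
    return [POINT.unpack_from(data, i * POINT.size) for i in range(count)]


if __name__ == "__main__":
    # Local demo; on the real setup one end would be the scanning Pi and
    # the other the Pi driving the Rift.
    sender, receiver = socket.socketpair()
    send_cloud(sender, [(0.0, 0.5, 1.25), (1.0, -2.0, 3.5)])
    print(recv_cloud(receiver))  # [(0.0, 0.5, 1.25), (1.0, -2.0, 3.5)]
```

Bandwidth is the catch: a full 640×480 depth frame is 307,200 points × 12 bytes ≈ 3.7 MB, while the Pi3’s 100 Mbit/s Ethernet moves at most about 12.5 MB/s, i.e. roughly three full frames per second. Downsampling the cloud (or a more compact encoding) would likely be needed.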