Shortcut: for instructions on how to run a server on the Quest for developing a-frame webVR apps that run on the Quest, scroll down to the how-to.

Background: Reading William Gibson’s Neuromancer as a child, I was always intrigued by how to get into, stay in and work in “the matrix” / cyberspace. Today, VR is still not where Gibson conceptualised it (we don’t have meaningful and conventionalised data visualisation and navigation in 3D yet), but we’re making baby steps. When I first saw this video in 2014, I fell in love with the idea of writing live-updating code in VR.

Inspecting the code today, it isn’t built in a-frame or webVR as far as I understand, but in three.js, which afaik is what a-frame itself is built on. It’s not hosted anywhere anymore, so you can’t try it out on your headset.

Having owned the DK2 and the CV1, I find that the Oculus Quest is finally the device of my dreams, as it doesn’t depend on external sensor setups, cables or a PC. For the moment, I don’t care about the resolution and the GPU. I want to explore the idea of having a fully fledged computer on your body to work with. It’s really a new device category. Right now, content and software are developed outside the HMD and then tested on it (think Unity 3D etc.). This is the case even for phones: you can’t develop for Android on Android without anything else (at least last time I checked). From my perspective (I grew up with the Commodore C64), the computer to consume on should be the computer to produce on.

The Quest is a computer: it has a huge screen, an OS and an internet connection, it runs Android and is Bluetooth capable. I came across a-frame and think that, for the moment, this is the go-to development framework compared to Unity 3D et al. However, to develop for a-frame, you also need a web server (although systems like glitch.me and pencode allow you to work in the browser). Ideally, this server should also run on the Quest itself (my ideal is a self-contained system).

Here is a little how-to:

- [This post assumes that you have enabled developer mode and are capable of sideloading via ADB or SideQuest.]

- Keyboard: I successfully paired a Bluetooth keyboard (using a sideloaded bt lister app; this one works on the Quest, others didn’t). It works in the Oculus Browser but not in the (sideloaded) Firefox Reality browser (current version in beta: 1.2.3; use *oculusvr-arm64-release-signed-aligned.apk for the Oculus Quest and start it from “unknown sources”). It also works in Termux (see below).

- [Optional: I can successfully work in glitch in the Oculus Quest browser (here’s something I composed from two other glitches plus some of my own code: https://glitch.com/~gallery-appear-disappear). In that scene, use the grip button on all the objects: the triceratops will produce boxes with physics, the sphere will change the environment, and you can resize the picture using both controllers. Note: You can leave out this step, but it’s nice to see that you can work on code in a VR browser and experience it in the same browser.]

- I successfully sideloaded Termux (it “contains” / “makes accessible” Linux in Android and interfaces with the hardware, read here) and can run it in the OculusTV environment (a bigger screen size would be nice though).

- In Termux (keep it up to date by issuing `apt-get update` and `apt-get upgrade`), we should install:
  - `pkg install nano` (command-line text editor)
  - `pkg install python` (needed in some cases)
  - `pkg install nodejs` (Node.js and npm)
  - `pkg install git` (for pulling git repositories)

- Next, we install a-frame by issuing `git clone https://github.com/aframevr/aframe.git` and change to that directory.

- We install the package with `npm install`.

- And we start the server with `npm start`.
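The steps above can be collected into one small script. This is a minimal sketch, not a tested installer: the `run` helper, the `setup_aframe` function and the `DRY_RUN` switch are hypothetical names that are not part of Termux or a-frame. By default it only prints the commands. With `DRY_RUN=0` it executes them in order and stops at the first failure.

```shell
# Sketch of the Termux setup steps from the how-to.
# DRY_RUN defaults to 1 (print only); set DRY_RUN=0 to really run the commands.

# run: hypothetical helper that prints a command in dry-run mode, else executes it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# setup_aframe: hypothetical wrapper around the commands listed in the how-to.
setup_aframe() {
  run apt-get update &&
  run apt-get upgrade &&
  run pkg install nano &&
  run pkg install python &&
  run pkg install nodejs &&
  run pkg install git &&
  run git clone https://github.com/aframevr/aframe.git &&
  run cd aframe &&
  run npm install &&
  run npm start
}

setup_aframe
```

Saved as, say, `setup.sh`, it could be started with `sh setup.sh` to preview the commands, or with `DRY_RUN=0 sh setup.sh` to run them.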

It takes a while for the system to put together the server and start it. Finally, it will print something like “server started at http://192.168.178.xx:9000”. Open that address in your Oculus Browser (or Firefox Reality) and voilà!
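Since switching between Termux and the browser is cumbersome, it can help to run the server in the background and read its address from a log file. This is a minimal sketch, assuming the server output contains a line with the URL like the one quoted above. `first_url` and `server.log` are hypothetical names.

```shell
# first_url: hypothetical helper that prints the first http(s) URL found on stdin.
first_url() {
  grep -Eo 'https?://[^[:space:]]+' | head -n 1
}

# Possible usage inside the cloned aframe directory (not run here):
#   npm start > server.log 2>&1 &   # start the server in the background
#   first_url < server.log          # print the address once the server is up
```

The helper prints nothing if the log does not contain a URL yet, so it may need to be called again after a short wait.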

Certainly, it is still a bit cumbersome to switch between Termux (which has to be launched via OculusTV) and the browser, and yes, nano is not the perfect tool for working on JavaScript and HTML, BUT: we have it working! A self-contained system running on the Oculus Quest for developing a-frame / webVR applications.
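To have something small to open in nano, a first scene can be written straight from the Termux prompt. This is a sketch under assumptions: `write_scene` and the `scratch` directory are hypothetical names, and the a-frame release in the script URL (1.5.0) is just one example version. How the file gets served, for example from inside the cloned repository, depends on your setup.

```shell
# write_scene: hypothetical helper that writes a minimal a-frame page to DIR/index.html.
write_scene() {
  dir=${1:-scratch}
  mkdir -p "$dir" || return 1
  cat > "$dir/index.html" <<'EOF'
<!DOCTYPE html>
<html>
  <head>
    <meta charset="utf-8">
    <title>Hello Quest</title>
    <!-- Assumption: A-Frame 1.5.0 from the official CDN; pick your own version -->
    <script src="https://aframe.io/releases/1.5.0/aframe.min.js"></script>
  </head>
  <body>
    <a-scene>
      <a-box position="0 1.5 -3" rotation="0 45 0" color="#4CC3D9"></a-box>
      <a-sky color="#ECECEC"></a-sky>
    </a-scene>
  </body>
</html>
EOF
}

# Usage: write_scene scratch && nano scratch/index.html
```

The scene is only a box in front of a plain sky, which is enough to check that edits made in nano show up in the headset browser after a reload.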

Some more references:

- node.js on Android: https://www.freecodecamp.org/news/building-a-node-js-application-on-android-part-1-termux-vim-and-node-js-dfa90c28958f/

- npm & a-frame: https://www.npmjs.com/package/aframe

Other experiments:

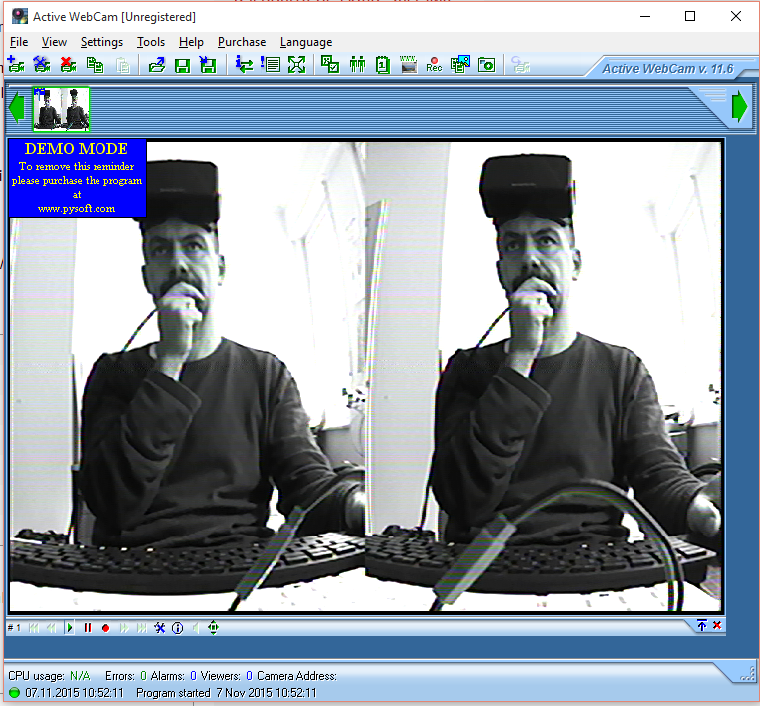

- I was able to connect a webcam to my phone using OTG and found an app in the Google Play Store that can actually stream the video to the phone’s screen. But sideloading it to the Quest and starting it there doesn’t deliver a live stream. (The intention is to look at my keyboard in VR.)

- I found some webVR code that can use the webcam as a texture on an object. It works on my PC but not on the Quest (neither in the Oculus Browser nor in Firefox Reality, although the latter has an enable button for webcams).

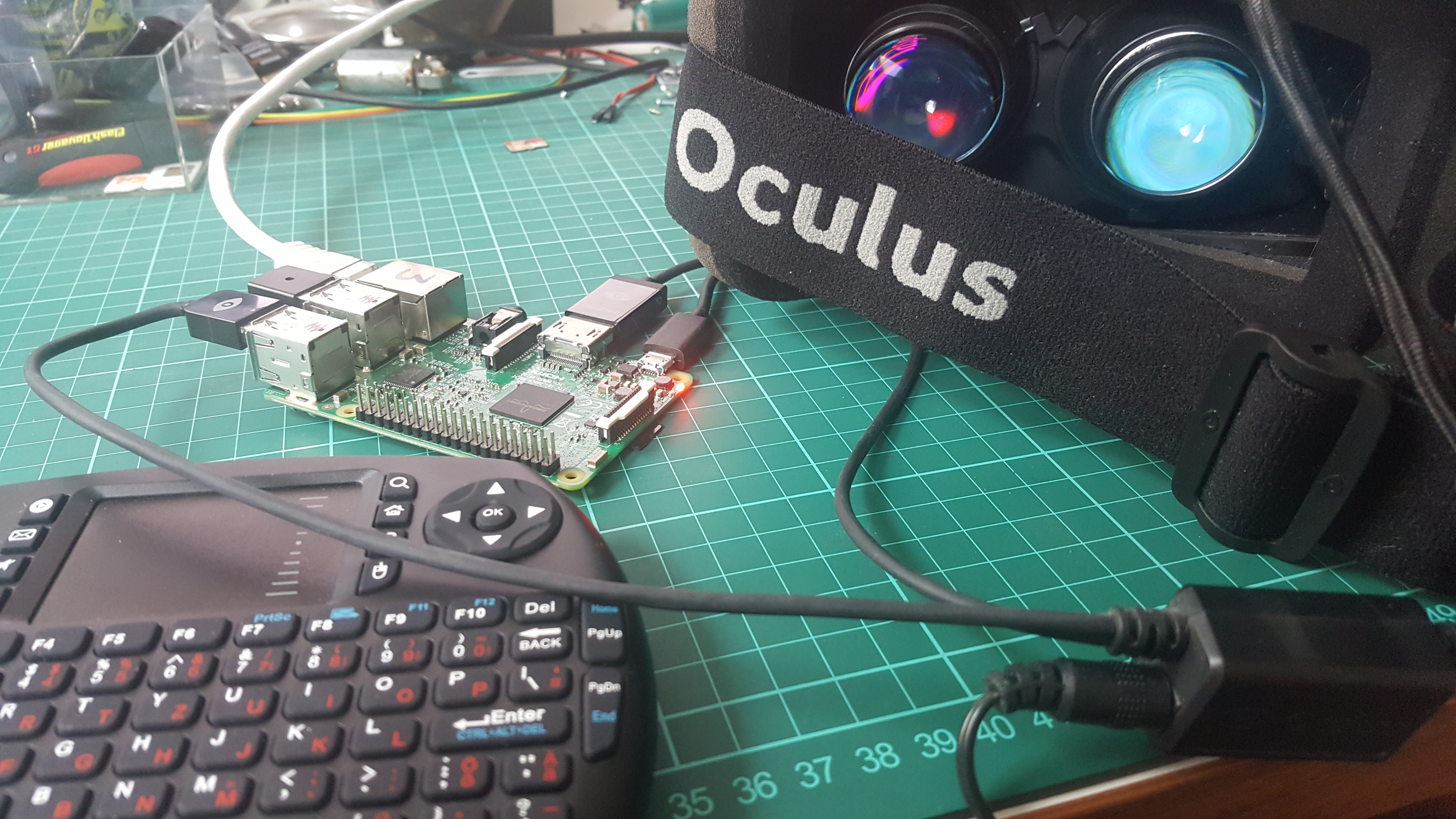

- I installed OVRVNC to log in to my Raspberry Pi, connect a webcam to that, stream video from there and run a web server. However, on the Quest it doesn’t connect to my Pi, although a VNC session from a PC works.